Why is "reading each article in detail" a dead end when writing a review article?

In the AI era, traditional linear reading methods are extremely inefficient:

- Low throughput: It takes two hours to read one article, and by the time I finished the fifth one, I had forgotten the first one.

- Notes are not searchable: There are only scattered highlights, no unified structure, and no evidence to be found when writing articles.

- The conflict was ignored: The fact that the samples of Paper A and Paper B were not found to be completely opposite meant that the opportunity to discuss the "boundary conditions" was missed.

- The citation lacks an "argumentative purpose": Citing is used to pad the numbers, not to support your point.

You need to transition from a "reader" to an "intelligence analyst".

Personal safety net process: Batch extraction + cross-validation

To write a high-scoring review article, you must establish an assembly line process.

Step 1: Create a batch of "metadata manifests"“

Do not open the PDF to read it. First, create a table in Excel or Notion and list the core fields:

- Theme

- Method

- Sample features

- Year

Step 2: Structured Extraction

Do not read the entire text. Extract only 4 "writeable" information blocks from each document:

- Claim: What is the author's core argument?

- Evidence: What data was used to support this? (P-value? Exact interview quotes?)

- Boundary conditions: Under what circumstances does this conclusion hold true? (For example, it only holds true in developing countries).

- Limitations: Does the author admit where he went wrong?

Step 3: Thematic Clustering

Don't follow the author's writing, follow...argumentWrite. Categorize the extracted information by "faction":

- Faction A: Research supporting the "positive correlation" (Paper 1, 3, 5).

- Faction B: Support research that is “unrelated” (Paper 2, 4).

Step 4: Cross-Validation

This is the key to getting a high score. Find the conflict points:

- “Why does Paper 1 say it's effective, while Paper 2 says it's ineffective?”

- “"Oh, so Paper 1 used a student sample, and Paper 2 used a sample of corporate executives."” This is your critical analysis!

Step 5: Output paragraph template

Directly applying the formula:

- consensus(Everyone agrees that X is important) → Disagreement(However, scholars debate how X influences Y) → Evidence comparison(Smith considered it positive, while Johnson considered it negative) → Cause Analysis(This may be due to sample differences) → Gap and Positioning(Therefore, this study will...).

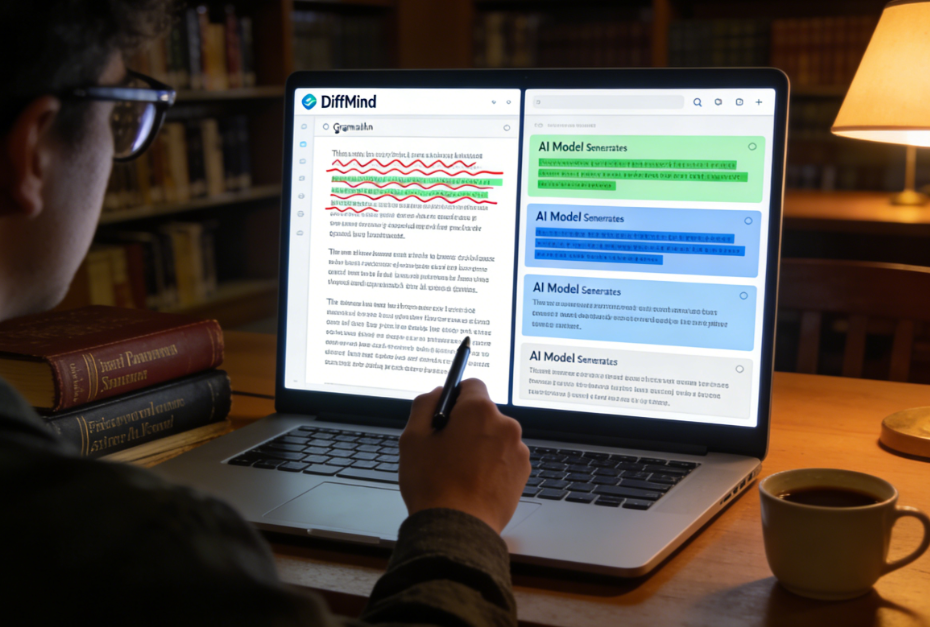

DiffMind Practical Application: Multi-model AI Helps You Complete "Intelligence Cleansing"“

Manually performing field extraction and cross-validation is very time-consuming.DiffMind(Multi-model AI comparison tool) is perfectly suited for this process.

1. Enhanced Question Query: Implementing "Field-Based Extraction"“

You can't just dump a PDF on an AI and say, "Summarize it." You need to utilize DiffMind's...Enhanced QuestioningConvert the commands into standard JSON fetch format:

“"As an academic assistant, please read the attached paper and output it strictly according to the following format:"

- Core_ClaimIn short, the core findings can be summarized in one sentence.

- Evidence_TypeQuantitative or qualitative?

- Key_DataCore statistics or citations.

- ContextResearch background (country/industry).

- GapThe author mentions future research directions. These notes can be directly copied and pasted into your Excel matrix.

2. Multi-model comparison: reducing misinterpretations and uncovering "hidden conflicts"“

A single model may miss crucial "constraints." In DiffMind, we analyzed the same paper using both GPT-4o and Claude 3.5 simultaneously:

- GPT-4o Skilled at extracting hard data (sample size, model parameters).

- Claude 3.5 They excel at capturing the author's tone and potential logical flaws. Practical skills: If the two models give inconsistent conclusions, it often means that the conclusions of this paper have complex limiting conditions, which is exactly where you need to manually intervene and read carefully.

3. AI Division of Labor Pipeline: From Clustering to Writing

Let different models play different roles:

- Model A (clustering): “"Here are the abstracts of 5 papers. Please divide them into two categories according to their research methods."”

- Model B (Find counterexamples): “"Regarding the view that 'social media causes anxiety,' please find evidence in the uploaded literature that refutes or limits this view."‘

- Model C (Comprehensive): “"Based on the above consensus and differences, write a 200-word literature review, requiring academic jargon and including citation placeholders."”

Conclusion

The essence of a literature review is not "reading notes," but rather "logical puzzle-solving." Through a strategy of "batch extraction + cross-validation," combined with DiffMind's multi-model capabilities, you'll no longer be a student drowning in a sea of PDFs, but a commander navigating information. This is the true essence. academic reading efficiency The ultimate mystery.